Research

Research Interests:

-

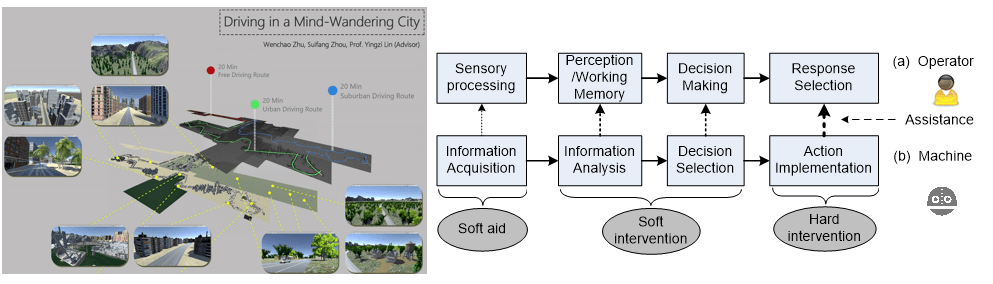

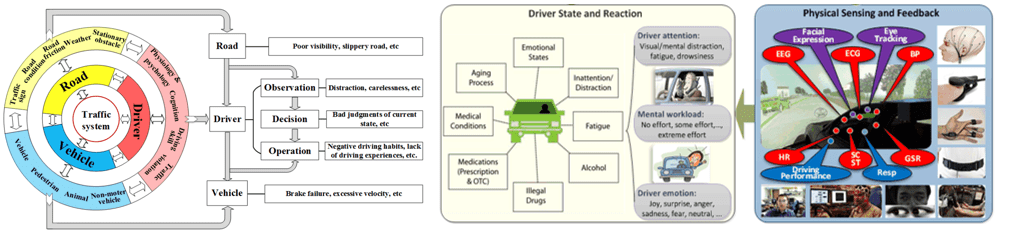

Intelligent Human-Machine Interaction, Human-Machine System Integration & Evaluation

-

Adaptive Human-Machine Interface Design, Noninvasive Intelligent Sensors & Smart Sensing Systems

-

Human State Multimodality Sensing & Information Fusion, Human Assistance System, Human-Friendly Mechatronics

-

Intelligent Transportation Systems & Transportation Safety, Human Factors in Healthcare, Patient Safety, Wearable Sensors & Telemedicine

Funded Research Projects:

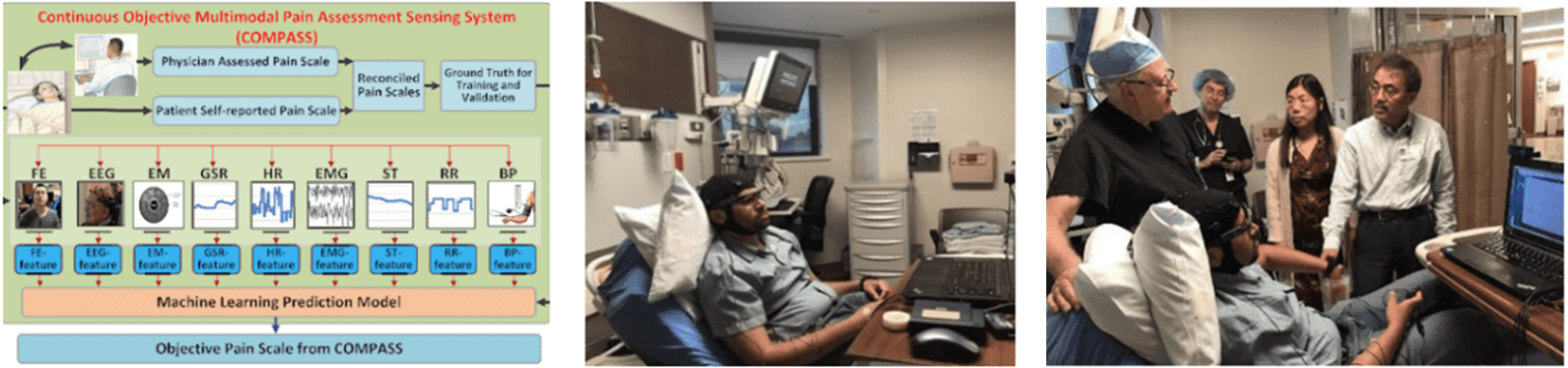

- Collaborative Research: Novel Computational Methods for Continuous Objective Multimodal Pain Assessment Sensing System (COMPASS), NSF award #1838796, in collaboration with the University of Texas at Arlington, and Brigham and Women’s Hospital, Harvard Medical School (Lead PI: Dr. Yingzi Lin).

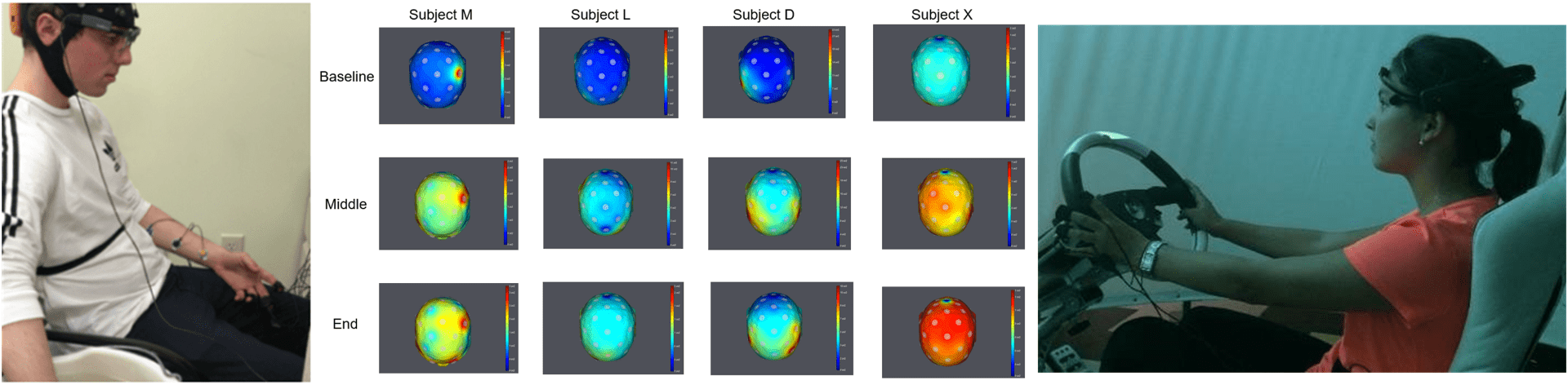

The goal of this project is to build a robust Continuous Objective Multimodal Pain Assessment Sensing System (COMPASS) and a clinical interface capable of generating objective measurements of pain from multimodal physiological signals and facial expressions. The candidate set of input measurements includes Facial Expression (FE), Electroencephalography (EEG), Eye Movement (EM), Galvanic Skin Response (GSR), Electrocardiogram (ECG), Electromyography (EMG), Skin Temperature (ST), Respiratory Rate (RR), and Blood Pressure (BP). In the absence of objective assessment techniques, clinicians typically rely on subjective pain scales for pain assessment and management, which has resulted in suboptimal treatment plans, delayed responses to patients’ needs, over-prescription of opioids, and drug-seeking behavior among patients. COMPASS will instead provide objective measurements that can be used to significantly improve pain management strategies and advance the field of patient safety.

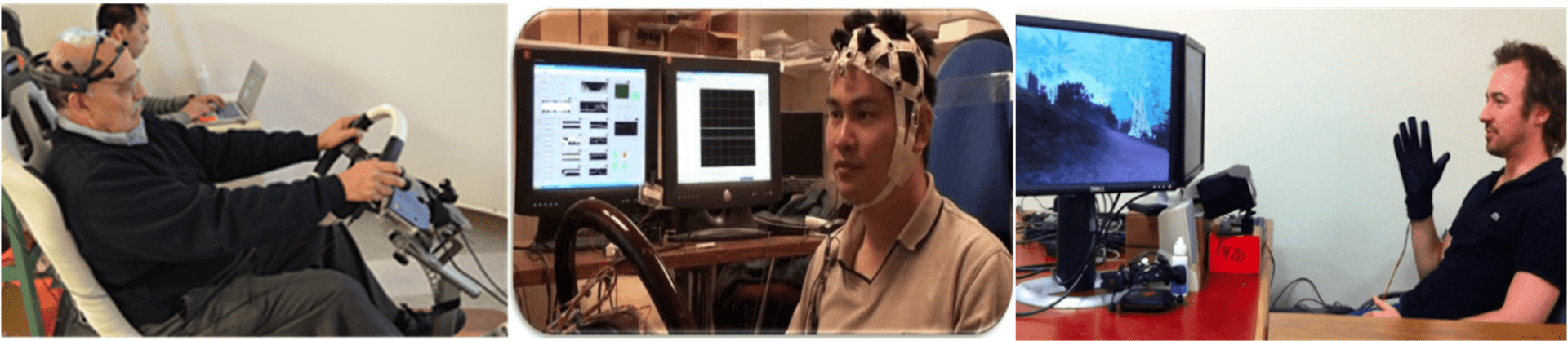

- Decoding Multi-Modal Physiological Response Patterns for Assessing Post-Stroke Cognitive Impairment in VR-based Driving, NIH (Co-PI)

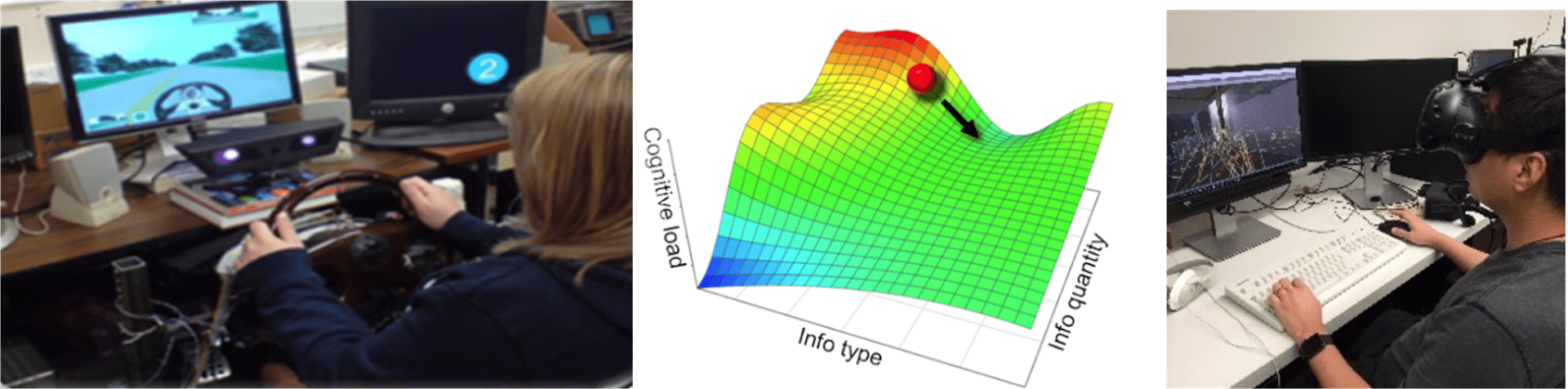

- Cognition-driven Display for Navigation Activities (Cog-DNA): Personalized Spatial Information System Based on Information Personality of Firefighters, NIST (Co-PI)

- Collaborative Research: Less is More: Personalized Spatial Information System Based on Real-Time Cognitive Load for First Responders in Emergency Indoor Wayfinding, NSF (Co-PI)

- I-Corps: Thin Film Cardiac Sensor, NSF (PI)

- Towards Human Friendly Mechatronics: Cognitive Detection and Bio-feedback Control in Human-Robot Interaction (PI)

- Integrated Individualized Modeling towards Cognitive Control of Human-Machine Systems, NSF (PI)

- CAREER: Bridging Cognitive Science and Sensor Technology: Non-intrusive and Multi-modality Sensing in Human-Machine Interactions, NSF (PI)

- Driver Drowsiness Recognition Using Eye-tracking and Physiological Technologies (PI)

- CNT-Integrated Sensing System for Human State Detection, NSF (PI)

- Evaluating Usability and Driver Distraction (Co-PI)

- Detection and Diagnosis of Driver Characteristics and States (PI)

- Investigation of Novel Technology for Intelligent Interface (PI)

- Modeling and Simulation Environment for Networked Critical Infrastructure, Natural Sciences and Engineering Research Council of Canada (NSERC) and Public Safety and Emergency Preparedness Canada (PSEPC) (Co-PI)

- Integrated Design of Function, Usability and Aesthetics (PI)

- A Non-intrusive Eyegaze Instrument for Real Time Operator’s Attention Tracking, Natural Sciences and Engineering Research Council (NSERC) of Canada, Research Tools and Instruments Grant (PI)

- Intelligent Adaptive Interface: Theory and Application in Vehicle, Natural Sciences and Engineering Research Council (NSERC) of Canada, Discovery Grant (PI)

Sponsors:

We could not keep making research progress without the help of our sponsors. Sincere thanks for their continued support! Please click the following pictures to visit their official websites for more information.


© Yingzi Lin. All rights reserved.